Senior Projects > Eye See What You See

The Eye See What You See project by William Ho, Christopher Hong, and Mihir Patel involves automatic eye detection and gaze tracking. The students implemented a system that can detect the eyes of multiple people standing several feet from a camera and, after a short calibration period, track their gazes. This project was supported by ITT Exelis, and it was one of two projects presented by Cooper Union at ITT Exelis during the spring 2013 semester. It came in first place at the 2013 RIT IEEE Student Design Contest.

Abstract from William's, Christopher's, and Mihir's final paper:

Gaze tracking systems can serve a wide range of applications - from improving advertising to allowing the handicapped to express themselves. However, the obtrusive nature and short range (less than one meter) of existing systems limit their practicality. This paper details a low-cost, real-time, unobtrusive gaze-tracking system capable of detecting multiple people at significant distances (two to three meters) from the camera. The system exploits the red-eye effect, and utilizes SVM and Haar cascade classifiers to detect eye pairs. It then uses geometric approximations to determine the point of gaze for each pair. An interactive display, consisting of advertisements that change based on people's gazes, has been implemented.

Details:

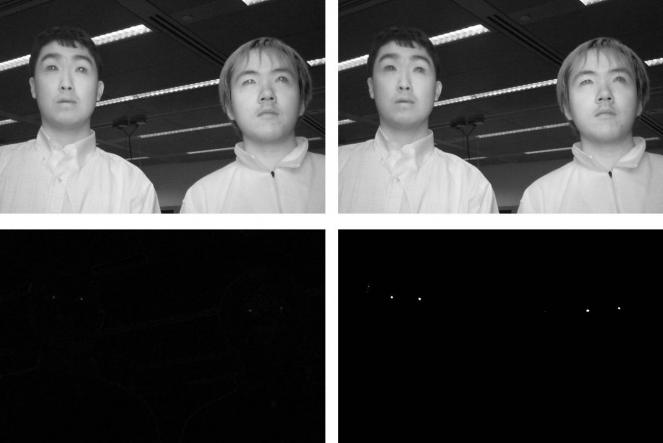

Most people are familiar with the "red-eye effect", which causes unrealistic red-tinted pupils in photographs. Typically this is something that people want to avoid, but this project creatively takes advantage of the red-eye effect to detect eyes in video in real time. Using a standard webcam and infrared LEDs that flash in sync with the camera, the system produces video in which the red-eye effect is present in every other frame and absent in the frames between. Subtracting adjacent frames yields candidate eye locations, because the pupils are the regions that change most between a lit and an unlit frame. This is followed by thresholding and multiple classification techniques to detect pairs of eyes. The accompanying image shows two consecutive frames from a video (top row) and the difference image produced before and after thresholding (bottom row).
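The frame-subtraction and thresholding steps described above can be sketched in a few lines. This is a minimal illustration rather than the students' implementation: the function names, the threshold value of 60, and the tiny synthetic frames are all assumptions chosen for the example, and the classification stage (SVM and Haar cascades) is not shown.

```python
def difference_mask(lit_frame, unlit_frame, threshold=60):
    """Return a binary mask of pixels that are much brighter in the lit frame.

    Frames are lists of rows of grayscale values (0-255).
    The threshold of 60 is an assumed value for illustration only.
    """
    mask = []
    for lit_row, unlit_row in zip(lit_frame, unlit_frame):
        mask.append([
            1 if (lit - unlit) > threshold else 0
            for lit, unlit in zip(lit_row, unlit_row)
        ])
    return mask


def candidate_pixels(mask):
    """List the (row, col) coordinates that survived thresholding."""
    return [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]


# Synthetic 4x6 frames: two "pupils" glow only while the LEDs are on.
unlit = [[20] * 6 for _ in range(4)]
lit = [row[:] for row in unlit]
lit[1][1] = 230
lit[1][4] = 225
lit[3][2] = 45  # small brightness change that stays below the threshold

mask = difference_mask(lit, unlit)
print(candidate_pixels(mask))
```

In a real pipeline the surviving pixels would be grouped into blobs and passed to the classifiers, which reject candidates that are not eye pairs.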

An evaluation of the eye detection component of the system revealed that no false positives occurred in the inspected video clips (i.e., there were no cases in which the system classified non-eyes as eyes). Additionally, the majority of eyes present in the video were correctly detected, although the detection rate falls with increasing distance, as expected. The results are summarized in the table below.

| Distance | Number of Frames | Expected Eyes | Detected Eyes | Percent Detected |
|---|---|---|---|---|
| 3-4.5 ft | 1294 | 2088 | 1894 | 90.71% |
| 4.5-6 ft | 1228 | 1956 | 1606 | 82.11% |
| 6-7.5 ft | 1264 | 2028 | 1416 | 69.82% |

To implement gaze tracking, volunteers were asked to stare at the center of a screen for a few seconds during a calibration period. The distance between the pupils could be used to determine the approximate distance of each person from the camera. The system would then track their eyes for a period of time and use basic geometry to determine the points of gaze. Various scenarios were implemented to evaluate the system. In one experiment, after the calibration period, the volunteers were asked to stare at one quadrant of the screen at a time, and the system predicted which quadrant was being viewed. The accuracy of this experiment is shown below.

| Quadrant | Number of Gaze Points | Correct Exp. Gaze Points | Percent Correct |
|---|---|---|---|
| Top-left | 150 | 134 | 89.33% |
| Bottom-left | 150 | 144 | 96.00% |
| Top-right | 150 | 142 | 94.67% |
| Bottom-right | 150 | 139 | 92.67% |
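As a rough illustration of the geometry involved, the sketch below estimates a viewer's distance from the pixel separation of the pupils using a pinhole-camera approximation, and maps a gaze point to a screen quadrant. The write-up does not give the project's actual formulas, so everything here is an assumption: the function names, the 63 mm average interpupillary distance, and the 1000-pixel focal length are hypothetical values picked for the example.

```python
import math

ASSUMED_IPD_M = 0.063       # assumed average adult interpupillary distance (metres)
ASSUMED_FOCAL_PX = 1000.0   # hypothetical camera focal length, in pixels


def estimate_distance_m(left_pupil, right_pupil,
                        focal_px=ASSUMED_FOCAL_PX, ipd_m=ASSUMED_IPD_M):
    """Pinhole estimate: distance = focal length * real size / size in pixels."""
    pixel_ipd = math.hypot(right_pupil[0] - left_pupil[0],
                           right_pupil[1] - left_pupil[1])
    if pixel_ipd == 0:
        raise ValueError("pupil positions coincide")
    return focal_px * ipd_m / pixel_ipd


def quadrant(gaze_xy, screen_w, screen_h):
    """Name the screen quadrant containing a gaze point (origin at top-left)."""
    x, y = gaze_xy
    vertical = "Top" if y < screen_h / 2 else "Bottom"
    horizontal = "left" if x < screen_w / 2 else "right"
    return f"{vertical}-{horizontal}"


# Pupils 42 pixels apart correspond to roughly 1.5 m under these assumptions.
print(round(estimate_distance_m((400, 300), (442, 300)), 2))
print(quadrant((100, 900), 1920, 1080))
```

The estimate grows as the pupils appear closer together in the image, which is consistent with using pupil separation as a proxy for distance.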

In another experiment, after the calibration period, a volunteer was asked to stare at an orange circle on a 47-inch flat-screen TV located several feet from the viewer. The orange circle jumps to a new position every few seconds, and the system tracks the volunteer's gaze, which it displays as a blue circle. The accuracy of the gaze tracking was measured over several runs of the experiment, and the system predicted the volunteer's point of gaze to within about half a foot. One recorded run of this experiment can be seen below. Note that the orange circle represents where the viewer is asked to stare, and the blue circle represents the system's calculated prediction of the point of gaze. Also note that the system detects and highlights the viewer's eyes (and also nose), and that some movement in the background does not hinder the system.
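One way to express such an error in physical units is to convert the pixel distance between the target and the predicted gaze point into feet on the screen. The sketch below does this under assumptions that are not stated in the write-up: a 16:9 panel with a 1920x1080 resolution, and hypothetical function names. It is not the measurement procedure the students used.

```python
import math


def screen_size_in(diagonal_in, aspect_w=16, aspect_h=9):
    """Width and height in inches of a panel with the given diagonal (assumed 16:9)."""
    diag_units = math.hypot(aspect_w, aspect_h)
    return diagonal_in * aspect_w / diag_units, diagonal_in * aspect_h / diag_units


def gaze_error_ft(target_px, predicted_px, diagonal_in=47.0,
                  res_w=1920, res_h=1080):
    """Distance in feet between target and predicted gaze points on the screen."""
    width_in, height_in = screen_size_in(diagonal_in)
    dx_in = (predicted_px[0] - target_px[0]) * width_in / res_w
    dy_in = (predicted_px[1] - target_px[1]) * height_in / res_h
    return math.hypot(dx_in, dy_in) / 12.0


# A prediction 200 pixels off in both directions on the assumed 47-inch panel.
error = gaze_error_ft((960, 540), (1160, 740))
print(f"{error:.2f} ft")
```

Averaging this quantity over all target positions in a run would give a single accuracy figure comparable to the one quoted above.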

In a final experiment, after the calibration period, the flat screen displays ten posters, and two volunteers stare at various posters. The system determines their points of gaze and denotes the hits with orange circular highlights. When a given poster has attracted enough attention, it takes over the screen for a few seconds, and then the experiment repeats. One recorded instance of this experiment can be seen below. Note that at the bottom right of the video, you can see the two volunteers, and if you look closely, you can see blue circles representing the system's predictions of their points of gaze.
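The poster-selection logic described above can be sketched as a simple hit counter. The layout and trigger rule are not specified in the write-up, so this example assumes a hypothetical 5x2 grid of posters and a fixed number of gaze hits before a poster takes over the screen. The class name `PosterBoard` and its parameters are illustrative only.

```python
from collections import Counter


class PosterBoard:
    """Counts gaze hits per poster on an assumed cols x rows grid."""

    def __init__(self, screen_w, screen_h, cols=5, rows=2, hits_to_win=30):
        self.screen_w = screen_w
        self.screen_h = screen_h
        self.cols = cols
        self.rows = rows
        self.hits_to_win = hits_to_win  # assumed attention threshold
        self.hits = Counter()

    def poster_at(self, x, y):
        """Return the index of the poster under (x, y), or None if off-screen."""
        if not (0 <= x < self.screen_w and 0 <= y < self.screen_h):
            return None
        col = int(x * self.cols / self.screen_w)
        row = int(y * self.rows / self.screen_h)
        return row * self.cols + col

    def register_gaze(self, x, y):
        """Record one gaze point; return the winning poster index, else None."""
        poster = self.poster_at(x, y)
        if poster is None:
            return None
        self.hits[poster] += 1
        if self.hits[poster] >= self.hits_to_win:
            self.hits.clear()  # start a fresh round after the takeover
            return poster
        return None


# Gaze points from two viewers, interleaved; poster 0 wins on its third hit.
board = PosterBoard(1920, 1080, hits_to_win=3)
points = [(100, 100), (1900, 1000), (150, 120), (1800, 900), (90, 200)]
results = [board.register_gaze(x, y) for x, y in points]
print(results)
```

Because hits from all viewers feed the same counters, a poster can win either through one person's sustained attention or through several people glancing at it.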